Meta AI has unveiled Llama 4, marking a significant advancement in artificial intelligence, particularly in large language models (LLMs). Officially released on April 5, 2025, Llama 4 promises to redefine human-AI interaction through its powerful multimodal features, processing both text and images seamlessly.

This post provides a detailed examination of Llama 4, covering its release, architecture, performance, and potential applications.

Release and Context

The release of Llama 4 positions it as a timely advancement in the AI domain, following predecessors like Llama 2 and Llama 3. It appears to be a family of models, with initial releases including Llama 4 Scout and Llama 4 Maverick. A larger model, Llama 4 Behemoth, is still in training, suggesting a phased rollout to optimize performance. Behemoth is anticipated to have approximately 2 trillion total parameters.

Key Features and Architectural Innovations

Llama 4 introduces several key innovations:

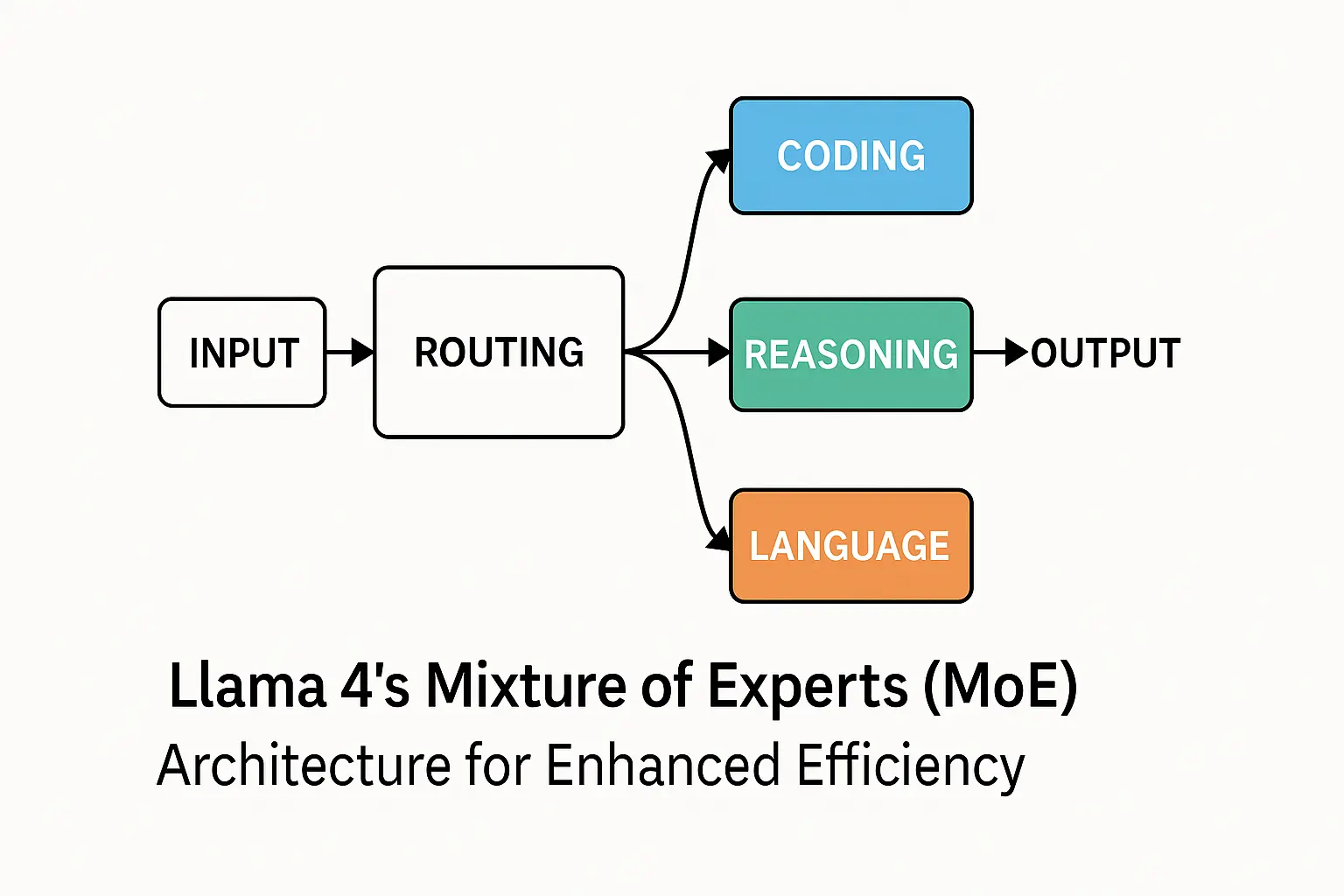

- Mixture of Experts (MoE) Architecture: This design enhances computational efficiency by activating only a specialized subset of parameters (“experts”) for each task. This balances powerful performance with reduced resource consumption.

- Scout: 109B total parameters (17B active), 16 experts.

- Maverick: 400B total parameters (17B active), 128 experts.

- Multimodality: Llama 4 models can process both text and image inputs, generating text-only outputs. This opens up new possibilities for applications like visual question answering and image-based reasoning.

- Large Context Window: The models support impressive context windows, enabling the processing of extensive data:

- Scout: Up to 10 million tokens.

- Maverick: Up to 1 million tokens. This is ideal for tasks like summarizing multiple large documents or maintaining long conversations.

- Advanced Training: Llama 4 Scout was trained on a massive 40 trillion tokens across over 200 languages, utilizing 32,000 GPUs and FP8 precision for efficiency. Meta AI has also focused on sustainability, reporting emissions data for models like Maverick (645 tons CO2eq location-based).

Performance Metrics and Benchmarks

Initial benchmarks indicate strong performance for the Llama 4 family:

- Scout: Reported to outperform competitors like Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1.

- Maverick: Shows superior performance compared to models like GPT-4o and Gemini 2.0 Flash, achieving an ELO score of 1417 on the LMArena leaderboard, highlighting its strength in coding, reasoning, and multilingual tasks.

- Behemoth (Anticipated): Expected to set new standards, potentially outperforming GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on challenging STEM benchmarks like MATH-500 and GPQA Diamond.

The efficiency gains from the MoE architecture make these powerful models more accessible for broader deployment without requiring excessive hardware.

Detailed Model Specifications

Here’s a summary of the specifications for the released Llama 4 models:

| Model | Active Parameters | Total Parameters | Experts | Context Window | Corpus Size | Training Data Cutoff |

|---|---|---|---|---|---|---|

| Llama 4 Scout | 17B | 109B | 16 | 10M | 40T | August 2024 |

| Llama 4 Maverick | 17B | 400B | 128 | 1M | 22T | August 2024 |

| Llama 4 Behemoth | 288B | ~2T | 16 | - | - | - (In Training) |

(Specifications sourced from publicly available data, primarily Wikipedia)

Scout is designed for single-GPU efficiency, while Maverick targets distributed systems.

Applications and Integration

Llama 4’s capabilities enable diverse applications:

- Enhanced Chatbots: Customer service bots that can understand and respond to text and image queries.

- Educational Tools: Combining text and visuals for richer learning experiences.

- Content Creation: Assisting with generating text based on large datasets or multimodal inputs.

- Development Tasks: Aiding developers with coding, debugging, and reasoning tasks.

Meta has already integrated Llama 4 into its platforms like WhatsApp, Messenger, and Instagram Direct, rolling it out across 40 countries.

Availability and Accessibility

Llama 4 models are accessible through various channels:

- Cloud Platforms: Available on Azure AI Foundry and Amazon SageMaker JumpStart.

- Developer Hubs: Accessible on Hugging Face with example code using the Transformers library for easy integration and fine-tuning.

- Licensing: Released under the Llama 4 Community License, permitting commercial and research use while adhering to acceptable use policies. This open-weight approach supports innovation and allows developers to tailor models for specific needs across 12+ languages.

Future Prospects and Conclusion

The ongoing development of Llama 4 Behemoth, with its potential 2 trillion parameters, signals further advancements in AI capabilities, particularly in complex reasoning, math, multilinguality, and even voice interaction. Meta AI’s strategy focuses on pushing the boundaries of open models to keep pace with closed-source competitors.

In conclusion, Llama 4 represents a pivotal moment in AI. Its blend of efficiency (MoE), advanced capabilities (multimodality, large context), strong performance, and open accessibility makes it a transformative technology. Its integration into everyday platforms and availability on major cloud services underscore its potential to reshape industries and empower developers and users worldwide.